Google Play Developer ID

Google removed the apps after being notified of their malicious nature, but the malware is still available for download from third-party repositories. In April 2017, security firm Check Point announced that malware named “FalseGuide” had been hidden inside approximately 40 “game guide” apps in Google Play. The malware is capable of gaining administrator access to infected devices, where it then receives additional modules that let it show popup ads. The malware, a type of botnet, is also capable of launching DDoS attacks.

In November 2011, Google announced Google Music, a section of the Google Play Store offering music purchases. In March 2012, Android Market was rebranded as Google Play. By 2017, Google Play featured more than 3.5 million Android applications. After Google purged many apps from the Google Play Store, the number of apps has risen back to over 3 million Android applications.

Click the View project link under Linked Google Cloud project. Complete your account setup by filling in your details and confirming registration. Click the “Invite new user” button in the top-right corner of the screen. You will receive an email from Google notifying you when your account is ready to be activated. If you are creating a new account, fill out the form to create your Google account, and click “NEXT”. A step-by-step guide to finding your Google Play account information.

What Is A Google Developer Account?

Also learn how to create a new developer account, how to use the new Google Play Console, and familiarize yourself with the recent Google Play policies. Google Play Store filters the list of apps to those compatible with the user’s device. Developers can target specific hardware components, software components, and Android versions (such as 7.0 Nougat). Carriers can also ban certain apps from being installed on users’ devices, for example tethering applications.

- In December 2010, content filtering was added to Android Market, each app’s details page started showing a promotional graphic at the top, and the maximum size of an app was raised from 25 megabytes to 50 megabytes.

- After the APK file is successfully uploaded, click Store listing to fill in all the information that will be visible on your app’s Google Play page.

- Some developers publishing on Google Play have been sued for patent infringement by “patent trolls”, people who own broad or vaguely worded patents that they use to target small developers.

- Google Play Newsstand was a news aggregator and digital newsstand service offering subscriptions to digital magazines and topical news feeds.

As of 2017, developers in more than 150 locations could distribute apps on Google Play, though not every location supports merchant registration. Developers receive 85% of the application price, while the remaining 15% goes to the distribution partner and operating fees. Developers can set up sales, with the original price struck out and a banner underneath informing users when the sale ends.
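As a rough illustration of the 85% / 15% split described above, the sketch below divides a single sale between the developer and the fee. The function name `split_sale` and the sample price are hypothetical, and the calculation assumes no taxes, refunds, or currency conversion, all of which affect real payouts.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def split_sale(price: Decimal, developer_share: Decimal = Decimal("0.85")):
    """Split one sale into (developer_amount, fee_amount), rounded to cents.

    developer_share defaults to the 85% figure quoted in the text; pass a
    different value to model another split. Taxes are ignored (assumption).
    """
    developer_amount = (price * developer_share).quantize(CENT, rounding=ROUND_HALF_UP)
    fee_amount = price - developer_amount
    return developer_amount, fee_amount


# Hypothetical app priced at 4.99 in some currency.
developer_amount, fee_amount = split_sale(Decimal("4.99"))
print(developer_amount, fee_amount)
```

Using `Decimal` rather than floats keeps the two amounts summing exactly to the listed price.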

In 2017, the Bouncer feature and other safety measures within the Android platform were rebranded under the umbrella name Google Play Protect, a system that regularly scans apps for threats. Some device manufacturers choose to use their own app store instead of or in addition to the Google Play Store. Google Play Music was a music and podcast streaming service and online music locker. In June 2018, Google announced plans to shut down Play Music by 2020 and offered users the option to migrate to YouTube Music; migration to Google Podcasts was announced in May 2020. In October 2020, the music store for Google Play Music was shut down. Google Play Music was shut down in December 2020 and was replaced by YouTube Music and Google Podcasts.

Bhavna is a freelance Android developer and educator from India. Learn about five major tasks to prepare your application for release. Also learn in detail about configuring your app for release.

For an app without push notifications, simply press the Publish button to start generating your app’s APK file. Once the APK file is ready, it will become available for download in the My Applications section. You know the file is ready when the Download APK button changes from grey to green.

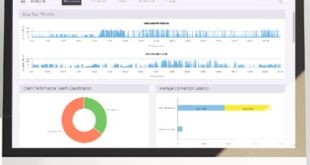

Depending on developer preferences, some apps can be installed to a phone’s external storage card. The Google Play Developer Console is important because it gives developers access to first-party data (trustworthy data collected about an app’s audience that comes straight from Google Play) that highlights the true performance of an app. The console allows the tracking of conversion rates; it shows the number of impressions an app listing receives and the number of installs an app receives from different sources over time. The user acquisition reports found in the console provide data on how an app is getting its growth, such as users’ origins in terms of source. (Google Play shows users’ traffic source, for example whether organic ‘explore traffic’ or paid traffic from ‘third party referrals’.) In 2012, Google began decoupling certain aspects of its Android operating system so that they could be updated through the Google Play store independently of the OS.
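The conversion-rate tracking described above can be sketched as follows, assuming CVR is defined as installs divided by listing impressions. The function name, the source labels, and all of the numbers are invented for illustration; they are not real console data or a console API.

```python
def conversion_rate(impressions: int, installs: int) -> float:
    """Return installs / impressions, or 0.0 when there were no impressions."""
    if impressions <= 0:
        return 0.0
    return installs / impressions


# Hypothetical per-source figures, as might be exported from an acquisition report.
sources = {
    "explore": {"impressions": 12000, "installs": 840},
    "third_party_referrals": {"impressions": 3000, "installs": 450},
}

cvr_by_source = {
    name: conversion_rate(stats["impressions"], stats["installs"])
    for name, stats in sources.items()
}

for name, cvr in cvr_by_source.items():
    print(f"{name}: {cvr:.1%}")
```

Comparing CVR per source, rather than one blended figure, is what lets a team see which traffic actually converts.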

Why The Google Play Developer Console Is Essential

However, it’s good to know that this option exists because Google may add future Google Play developer options that could be useful even to non-developers. Developers who are creating Android apps will often send test versions of the app to members of their team. This new internal app sharing feature within Google Play developer options makes that process easier.

Many Android users, even those who have never developed an app in their lives, already know about Android developer options. You need to know about this section of the OS if you are developing apps or if you want to do things like unlock bootloaders, flash custom ROMs, or enable certain hidden features. However, now there are Google Play Store developer options, a new set of options that applies to the Google Play Store only. Some developers publishing on Google Play have been sued for patent infringement by “patent trolls”, people who own broad or vaguely worded patents that they use to target small developers. If the developer manages to successfully challenge the initial assertion, the “patent troll” changes the claim of the violation in order to accuse the developer of having violated a different assertion in the patent.

How Much Does A Google & Apple Developer Account Cost

After the APK file is successfully uploaded, click Store listing to fill in all the information that will be visible on your app’s Google Play page. The icon will be shown on the user’s phone after installing your app. How to add an additional user to your Google Play developer account. Now that you have created your account, you’ll need to ask our App Builder team to manage your developer account so we can automatically apply updates to your app. Having the Google Play Store developer options enabled should create no problems.

Click the Add New Application button to start adding your app to Google Play. Click the Download APK button, and download the file to your computer. Now we have everything ready so that you can generate your app’s APK file. Once it’s loaded you’ll see a message saying “Our bots are finishing the account link” and you should see the Authenticated badge on each authenticated app on the Manage Sources page soon.

A separate online hardware retailer called the Google Store was introduced on March 11, 2015, replacing the Devices section of Google Play. On December 4, 2019, Qualcomm announced that its Snapdragon 865 supports GPU drivers updated through the Google Play Store. This feature was initially introduced with Android Oreo, but vendors had not yet added support.

All official app stores require you to purchase a developer account in order to publish apps. This course is for Android developers who want to publish their first Android app to the Google Play Store. This course expects you to be familiar with Android Studio and the fundamentals of the Kotlin programming language. You will also need a Google developer account to upload your app to the Play Store, which currently has a 25 USD fee. Learn how to build a release version of your app and upload it to the Google Play Store for testing and final release to end users.

In July 2017, Google expanded its “Editors’ Choice” section to feature curated lists of apps deemed to provide good Android experiences within overall themes, such as fitness, video calling and puzzle games. Google requires that developers charging for apps and downloads through Google Play use Google Play’s payment system. The in-app billing system was originally introduced in March 2011. All developers on Google Play are required to feature a physical address on the app’s page in Google Play, a requirement established in September 2014. Applications are available through Google Play either free of charge or at a cost. They can be downloaded directly on an Android device through the proprietary Google Play Store mobile app or by deploying the application to a device from the Google Play website.

To enroll in the Google Play Developer program, you’ll need a Google account and to pay a one-time $25 fee. You must enroll in the Apple Developer program as an Organization. To enroll in the Apple Developer program, you’ll need to set up an Apple ID and pay a $99/year fee. If you’re a nonprofit or government agency, Apple will waive your fee. Push the Save Draft button and, if all the sections have a green check mark, your application is ready to be published. Finally, push the Publish app button and your application will be available on Google Play within a couple of hours.

ASO teams must have these stats in order to increase impressions and installs, and to monitor CVR over time. Only by analyzing them are ASO teams able to identify any areas of an app’s listing that need attention or improvement and show where an app is successful, in order to iterate on those elements and place them elsewhere on the product page. Once you create an account, you have to wait 48 hours for Google to confirm your account. For free apps, Google charges no additional fee, but it does take 30% of revenue for paid apps on the platform. Learn how to create a new developer account and set up your developer profile.

In all of 2017, over 700,000 apps were banned from Google Play due to abusive content; this is a 70% increase over the number of apps banned in 2016. Google has also previously released yearly lists of apps it deemed the “best” on Google Play. Launched in 2017, Google Play Instant, also called Google Instant Apps, allows a user to use an app or game without installing it first.

Google Play Instant Apps

Learn how to use the different link formats that Google provides to bring your users to your app’s home page, your developer home page, and so on. Learn to create a store listing by adding the required text as well as graphics. Also learn to fill in related sections like App Content, which includes Privacy Policy, App Access, Content ratings, Data Safety, and so on. In July 2017, Google described a new security effort called “peer grouping”, in which apps performing similar functionalities, such as calculator apps, are grouped together and attributes compared.
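The link formats mentioned above can be sketched with a few small helpers. The URL patterns follow the commonly used Google Play web links (`details?id=` for an app page, `dev?id=` for a developer page keyed by developer ID, and `search?q=` for search results); the function names, package name, and developer ID below are placeholders, and the current official linking documentation should be checked before relying on them.

```python
from urllib.parse import urlencode

PLAY_BASE = "https://play.google.com/store"


def app_page_url(package_name: str) -> str:
    """Link to an app's store listing by its package name."""
    return f"{PLAY_BASE}/apps/details?{urlencode({'id': package_name})}"


def developer_page_url(developer_id: str) -> str:
    """Link to a developer page by its numeric developer ID."""
    return f"{PLAY_BASE}/apps/dev?{urlencode({'id': developer_id})}"


def search_url(query: str) -> str:
    """Link to Play Store search results, restricted to apps."""
    return f"{PLAY_BASE}/search?{urlencode({'q': query, 'c': 'apps'})}"


# Placeholder values, not a real app or developer.
print(app_page_url("com.example.app"))
print(developer_page_url("1234567890123456789"))
print(search_url("puzzle games"))
```

Building the query string with `urlencode` keeps spaces and special characters in search terms correctly escaped.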

- Payouts for developers occur each month on the 15th for the previous month’s sales.

- App developers can respond to reviews using the Google Play Developer Console.

- This goes into the License Key field of the general Google Play Store settings in BuildBox.

- At the Black Hat security conference in 2012, security firm Trustwave demonstrated their ability to upload an app that would circumvent the Bouncer blocker system.

- Follow along here, and we’ll tell you how to enable Google Play developer options, as well as what benefits they might bring.

- Google Play was launched on March 6, 2012, bringing together Android Market, Google Music, Google Movies and the Google eBookstore under one brand, marking a shift in Google’s digital distribution strategy.
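The payout rule in the list above (sales from one month are paid on the 15th of the next) can be expressed as a small date calculation. This is only a sketch of that stated rule: the function name is hypothetical, and it ignores weekends, holidays, payment thresholds, and bank processing time.

```python
from datetime import date


def payout_date(sale_date: date) -> date:
    """Return the 15th of the month following the sale, per the stated rule."""
    if sale_date.month == 12:
        # December sales roll over into January of the next year.
        return date(sale_date.year + 1, 1, 15)
    return date(sale_date.year, sale_date.month + 1, 15)


print(payout_date(date(2017, 3, 6)))
print(payout_date(date(2017, 12, 31)))
```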

Users outside the countries/regions that support paid content only have access to free apps and games through Google Play. The Google Play Store app features a history of all installed apps. Users can remove apps from the list, with the changes also synchronizing to the Google Play website interface, where the option to remove apps from the history does not exist. Users can submit reviews and ratings for apps and digital content distributed through Google Play, which are displayed publicly. App developers can respond to reviews using the Google Play Developer Console. Until March 2015, Google Play had a “Devices” section for users to purchase Google Nexus devices, Chromebooks, Chromecasts, other Google-branded hardware, and accessories.

Apple Developer Account

Even after such apps are force-closed by the user, advertisements remain. Google removed some of the apps after receiving reports from Sophos, but some apps remained. In August 2017, 500 apps were removed from Google Play after security firm Lookout discovered that the apps contained an SDK that allowed for malicious advertising. The apps had been collectively downloaded over 100 million times, and covered a wide variety of use cases, including health, weather, photo-editing, Internet radio and emoji. Google publishes the source code for Android through its “Android Open Source Project”, allowing enthusiasts and developers to program and distribute their own modified versions of the operating system. However, not all these modified versions are compatible with apps developed for Google’s official Android versions.

This not only gives you an increased sense of brand ownership, but also improves your visibility on the Store and helps you get discovered. If, for some reason, yours didn’t, simply update it from the app’s Settings. Google Play developer options operate independently of the main Android options and must be enabled in a different way. Follow along here, and we’ll tell you how to enable Google Play developer options, as well as what benefits they may bring. Most people know about Android developer options, but now there is a Google Play version.

One of these components, Google Play Services, is a closed-source system-level process providing APIs for Google services, installed automatically on nearly all devices running Android 2.2 “Froyo” and higher. With these changes, Google can add new system functionality through Play Services and update apps without having to distribute an upgrade to the operating system itself. As a result, Android 4.2 and 4.3 “Jelly Bean” contained relatively fewer user-facing changes, focusing more on minor changes and platform improvements. Google Play Store, shortened to Play Store on the Home screen and App screen, is Google’s official pre-installed app store on Android-certified devices.

Software Bans

Industry experts know that Google takes first-time installs into account when making all of its ranking and featuring decisions in its store. MMPs, which also provide tools for analysis, can only show the number of users who installed and launched; they can’t differentiate. If these users were to be taken into consideration, it would be impossible to view the figure as a true representation of first-time installs, which means that the first-install data from Google is more valuable to ASO teams. In order to truly understand and improve CVR, it’s important to know exact first-time install figures. A Google Developer Account costs $25 and allows users to publish apps on the Google Play Store only. Apple also offers an upgraded Enterprise account, which costs $299 per year.
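To compare the account fees quoted in this article over time, the sketch below totals the one-time $25 Google fee against Apple’s $99/year standard and $299/year Enterprise fees. The function name and dictionary keys are hypothetical, prices are taken from the text and may have changed, and fee waivers are not modeled.

```python
GOOGLE_ONE_TIME_FEE = 25      # USD, paid once (figure from the text)
APPLE_ANNUAL_FEE = 99         # USD per year (figure from the text)
APPLE_ENTERPRISE_FEE = 299    # USD per year (figure from the text)


def cumulative_cost(years: int) -> dict:
    """Total account fees in USD after a whole number of years (>= 1)."""
    if years < 1:
        raise ValueError("years must be at least 1")
    return {
        "google": GOOGLE_ONE_TIME_FEE,
        "apple": APPLE_ANNUAL_FEE * years,
        "apple_enterprise": APPLE_ENTERPRISE_FEE * years,
    }


for years in (1, 3, 5):
    print(years, cumulative_cost(years))
```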

Atech Guides Android APPS
